Summarised by Centrist

The new ChatGPT o1 model can “reason” like a person, solving complex problems. While this is great for users, it is also a potential goldmine for cybercriminals.

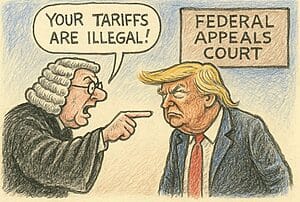

Crooks could exploit the AI’s ability to hold human-like conversations, making scams more convincing and harder to detect.

Security expert Dr Andrew Bolster stated, “Where this generation of LLM’s excel is in how they go about appearing to ‘reason’… Lending their use to romance scammers or other cybercriminals leveraging these tools to reach huge numbers of vulnerable ‘marks’.”

AI-powered scams are projected to lead to a dramatic increase in fraud losses over the next few years. The risk is real: criminals can run large-scale scams cheaply, at a cost of as little as one dollar per hundred responses.

To address the potential for skyrocketing AI-powered scams, OpenAI said it tests how well its models continue to follow safety rules when a user tries to bypass them (known as “jailbreaking”), but no system is foolproof. As tech journalist Sean Keach put it, “AI is here to stay. There’s no doubt about it… The responsibility will be on you and me to stay safe in this scary new world.”

Users are advised to remain vigilant. Tips include being sceptical of deals that seem too good to be true, avoiding unsolicited links, and consulting trusted friends or family members when something feels off.